Hello! Thanks for reading The Smarter Image. I appreciate your attention. If you want to help support this newsletter, please recommend it to a friend. Thank you!

First Things

Want to learn about AI/ML features in Lightroom? I’m doing a free online webinar for Rocky Nook in support of my book Adobe Lightroom: A Complete Course and Compendium of Features on December 21 at 11:00 AM PST: How Smart Is Lightroom? AI-Powered Photo Editing with Jeff Carlson.

Speaking of speaking, in January I’m doing an in-person class at Kenmore Camera in Kenmore, WA called Creative (and Painless!) Photo Editing in Lightroom – LIVE w/ Jeff Carlson: Saturday, January 13, 2024 · 9am – 12pm PST. This would make a great holiday gift for the Seattle-area photographer in your life!

One of the best parts of doing live events is the interaction, so bring your questions!

Luminar Neo Gets into the Generative Game

When Adobe released Firefly and, more significantly, incorporated Generative AI into Photoshop, I think the technology really started to hit home for photographers. Yes, DALL-E and Midjourney and others were already out there, but they weren’t really photography tools. Photographers saw them as intriguing toys to experiment with or as potential threats, but not as photography.

Firefly gave the often-complex process of creating GenAI imagery from text prompts a friendlier interface, even though the results were not as good as what other tools were creating. And it was free while in beta.

But incorporating Firefly into Photoshop turned the technology into a tool. Sure, you could quickly composite a (sometimes janky) dragon into one of your photos, but you could also select a large distracting object and have Photoshop seamlessly remove it. After years of cloning and stamping, and then wielding the magic of Content-Aware Fill, which did wonders on small areas, we now had the ability to quickly remove things and have them replaced by realistic facsimiles of what might have existed in their place.

My point isn’t to lionize Adobe here, but to spotlight the impact the company has made—and continues to make—for everyday photographers who otherwise wouldn’t encounter GenAI except on social media and in the news. For eight months, Photoshop was the only major editor to incorporate GenAI in this way, giving it a significant head start.

But now it’s not the only one. I’ve been curious to see which company would be next to add generative tools to its photo editing software, and now we have an answer: Skylum, in the latest version of Luminar Neo.

Next-Gen Neo

I continue to maintain that the biggest benefit photographers get from AI/ML is in service of boosting editing tools. Creating digital artworks from scratch is a fine pursuit, but photographers still want to create photography using cameras.

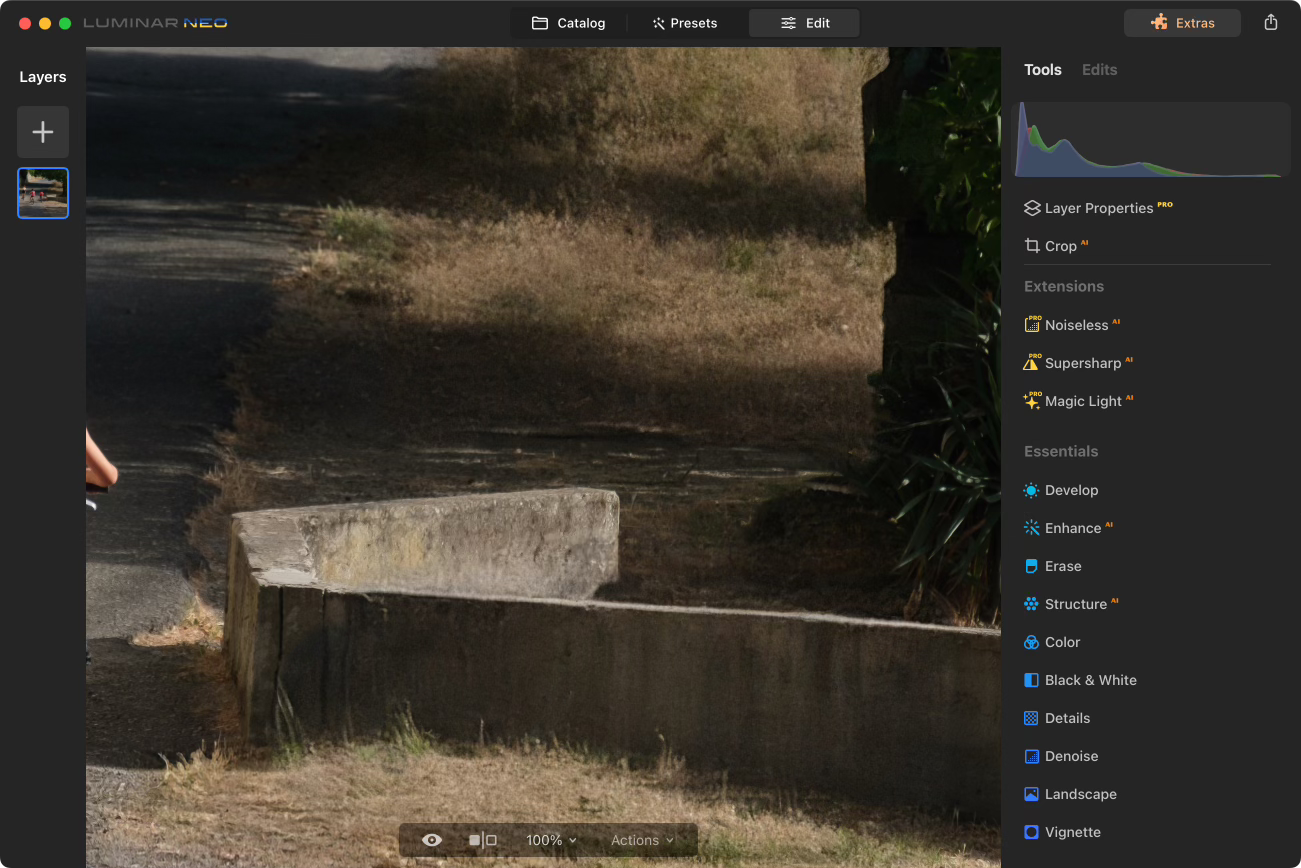

So it makes sense that Luminar’s first Generative AI features are GenErase, which removes areas, and GenSwap, which fills in areas based on text descriptions. GenSwap is admittedly not a great name—it implies I’m switching one area of an image with another area—but I’m guessing “GenFill” would have invited stern letters from Adobe’s lawyers as being too close to the Generative Fill feature in Photoshop. Skylum has also announced GenExpand, a tool for expanding the canvas of a photo and filling in the new areas.

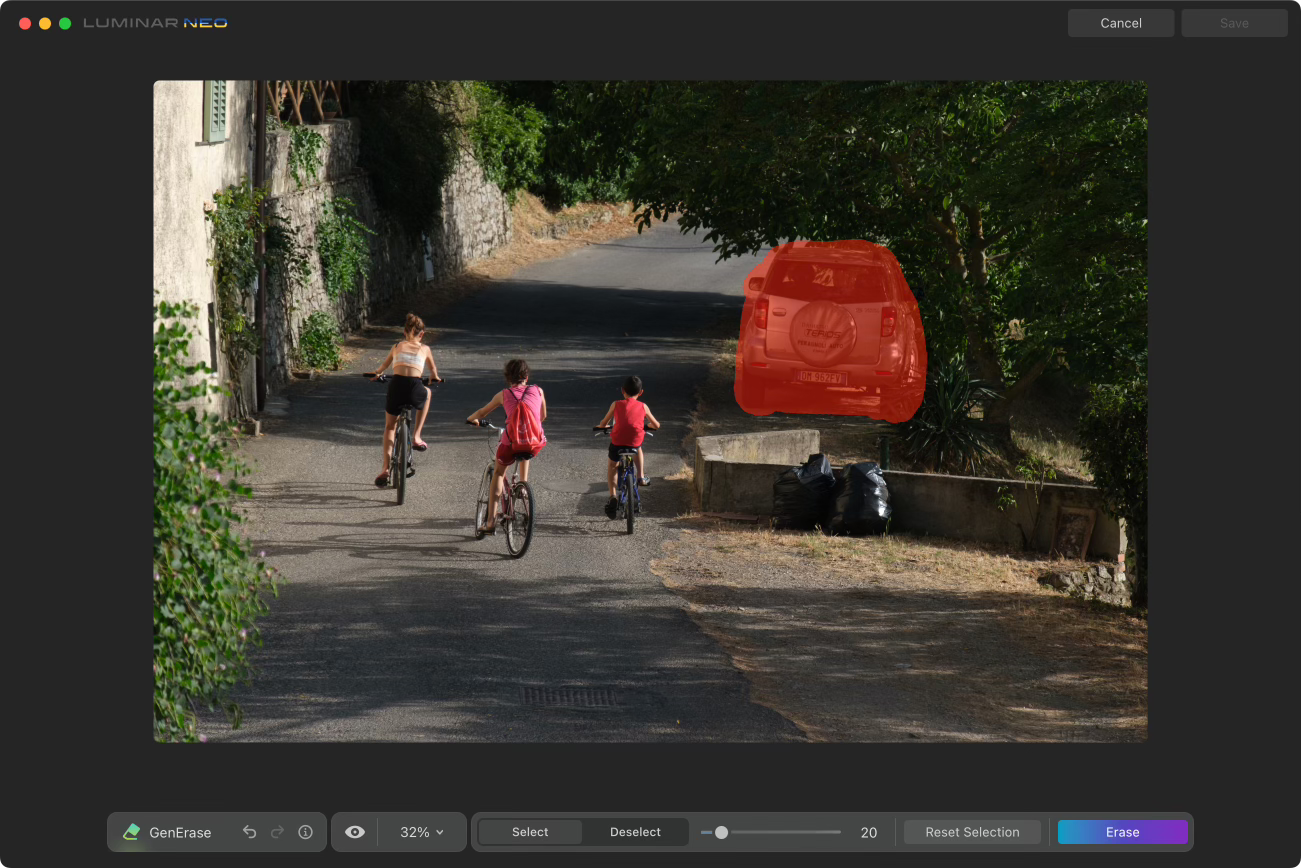

GenErase and GenSwap appear in a new Generative Tools section of the right-hand sidebar as separate tiles, much as the add-on Extensions show up. They aren’t part of the Edit interface, where you adjust other aspects of a photo, but rather exist as separate tools. Using them is straightforward: select an image, click the feature (such as GenErase) and then paint over the area you wish to edit. In this example, I want to remove a car parked at the side of the road.

(Eagle-eyed readers will notice that I’m using the same example image from when I wrote about Generative Fill in Photoshop.)

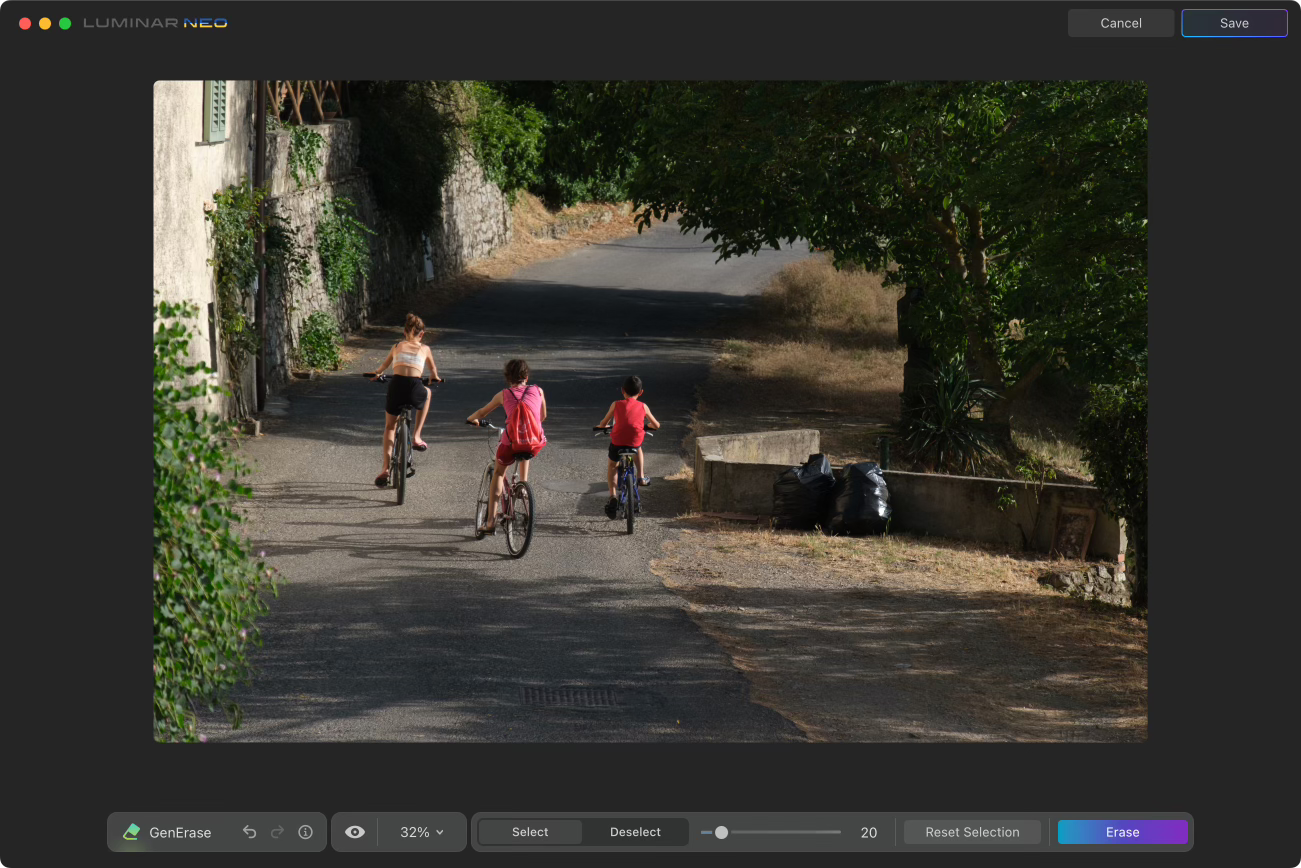

When you click Erase, the app processes the image in the cloud and then displays the result. Unlike Photoshop, which gives you three options to choose from, Luminar presents one result. If you’re not happy with that, click Erase again and see what you get. You can also reset the selection and erase another area if there’s more cleanup to do.

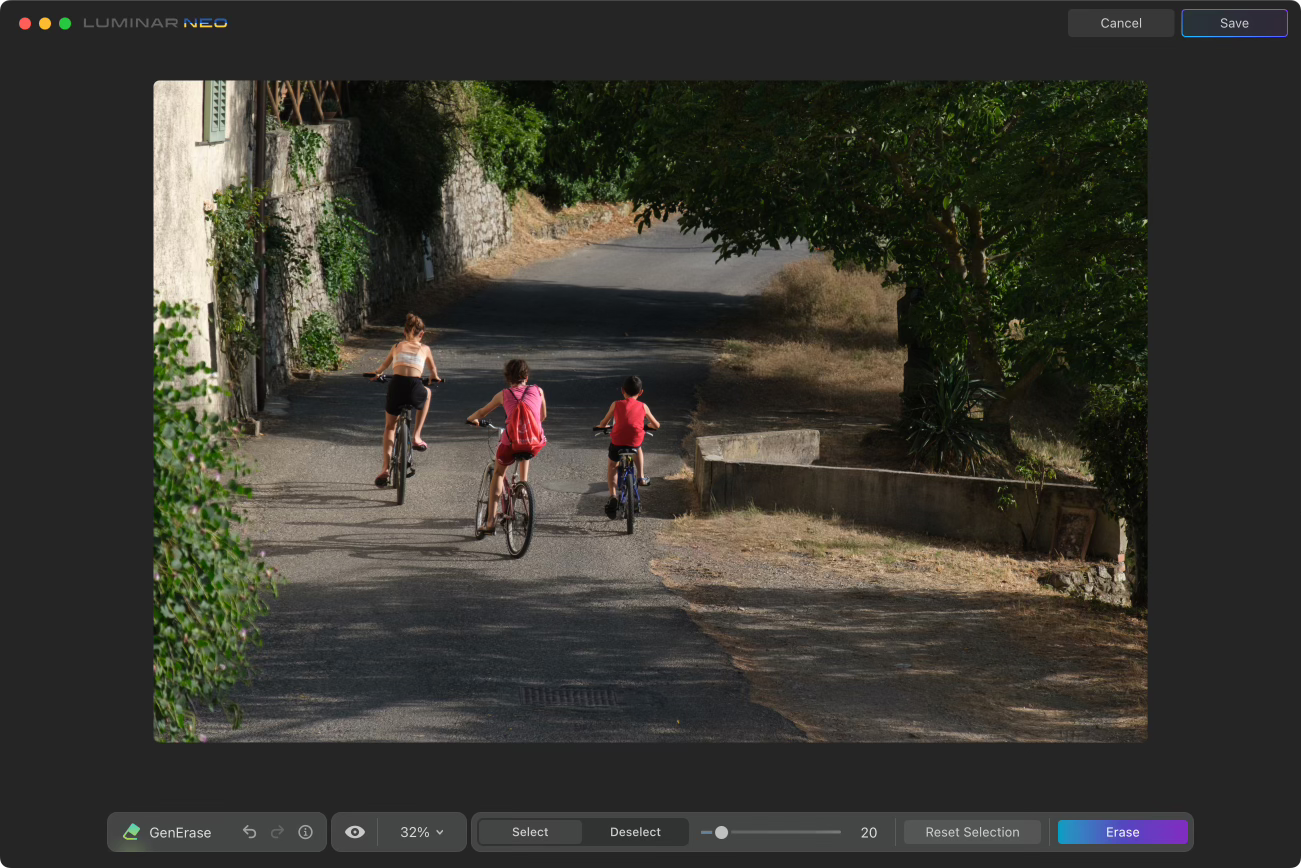

In my testing, the generative results were pretty good (as shown above). Sometimes it takes several passes over an area, like the stone wall where I removed the garbage bags:

One of the gotchas of generative fill/erase tools has been resolution: depending on the source image, Photoshop’s Generative Fill replacements are sometimes noticeably lower resolution than the surrounding area. That’s because the image generators all work at low res to optimize the processing time and minimize computational load.

Skylum told me that Neo is upscaling the generated content from the low-resolution source in order to improve the quality. In this example, I can still see artifacts at 100% view. In most viewing circumstances (i.e., online, on devices, and zoomed to fit), most people won’t notice the difference in quality.

Unlike Photoshop, Luminar is not creating generative layers. Instead, you’re working on a new version of the image: when you’re satisfied with the results, you click Save to create a separate TIFF file that incorporates the edits. From a strict standpoint, Luminar is working non-destructively, because the original image isn’t being edited. However, each generative tool session creates a new file. If you want to go back in and erase something you missed before clicking Save, you need to do that in the edited TIFF…which in turn creates a new file.

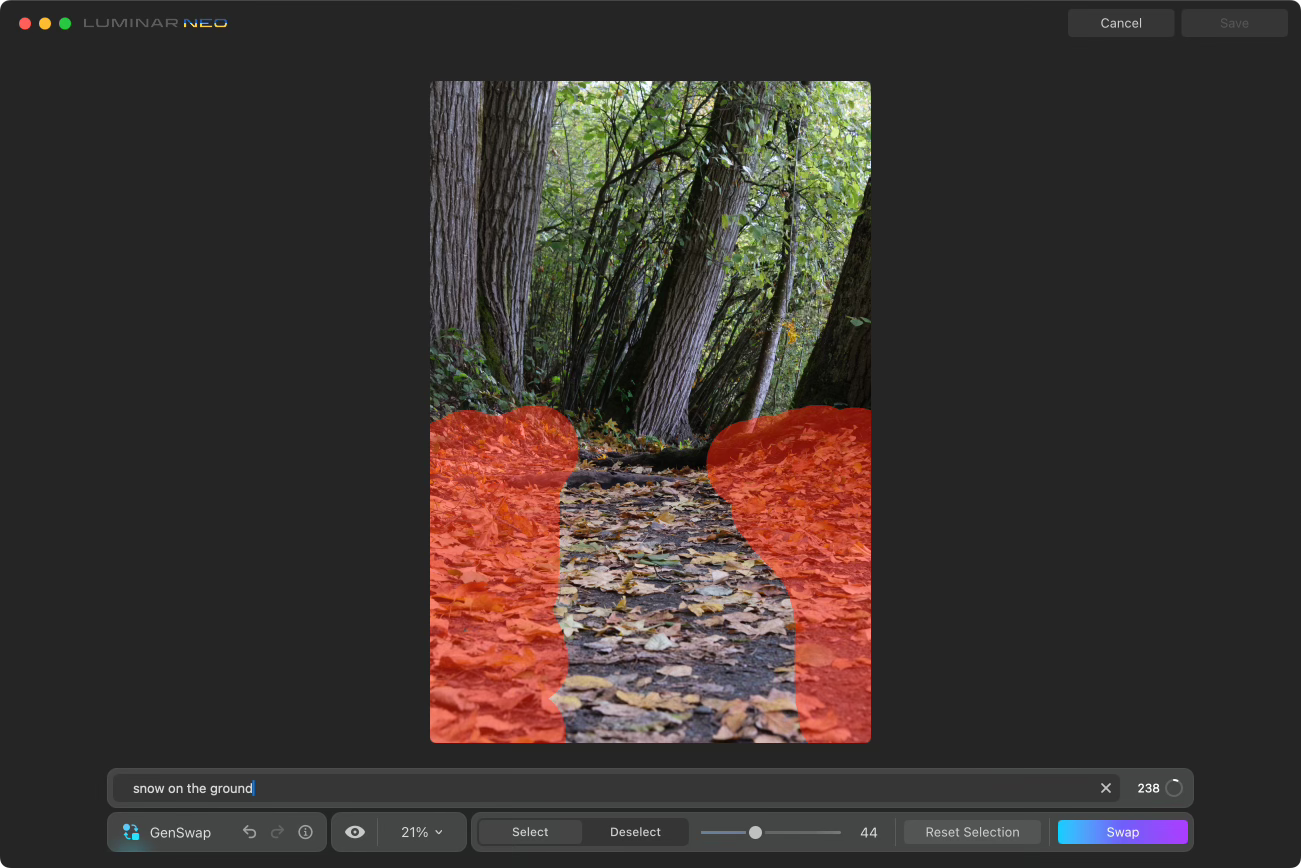

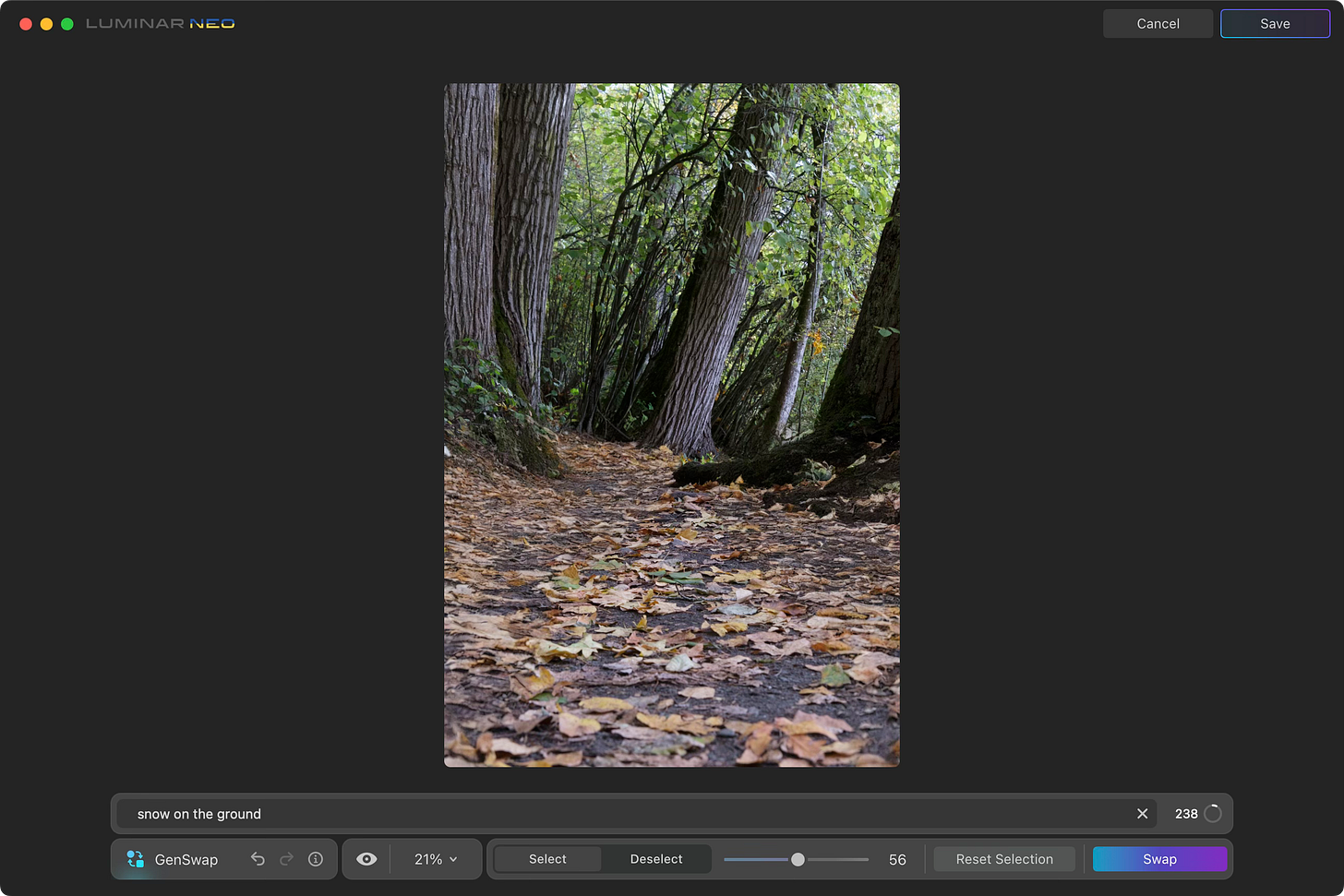

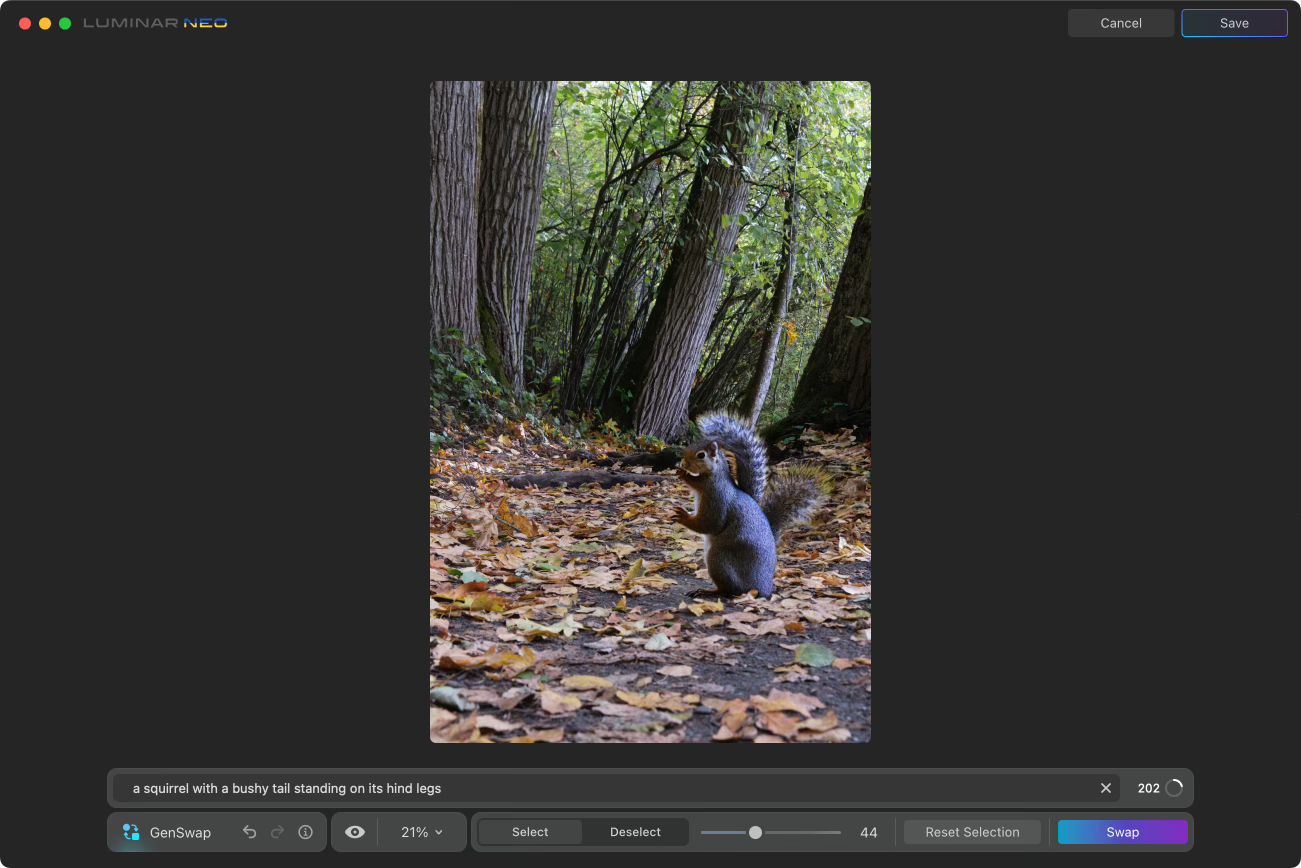

GenSwap works in a similar way: choose a photo, click the GenSwap button, and paint the areas you wish to change. Then, in the field that appears, type a description of what you’d like to see and click Swap.

As with all generative tools so far, you won’t know exactly what you’re going to get until the app finishes processing. Expect to spend time waiting for results and then tailoring your text to better describe what you want. In my testing, it sometimes just refused to generate what I asked for: multiple combinations of “snow,” “snow lining the path,” and “thick melting snow” in the following image didn’t generate a lick of precipitation.

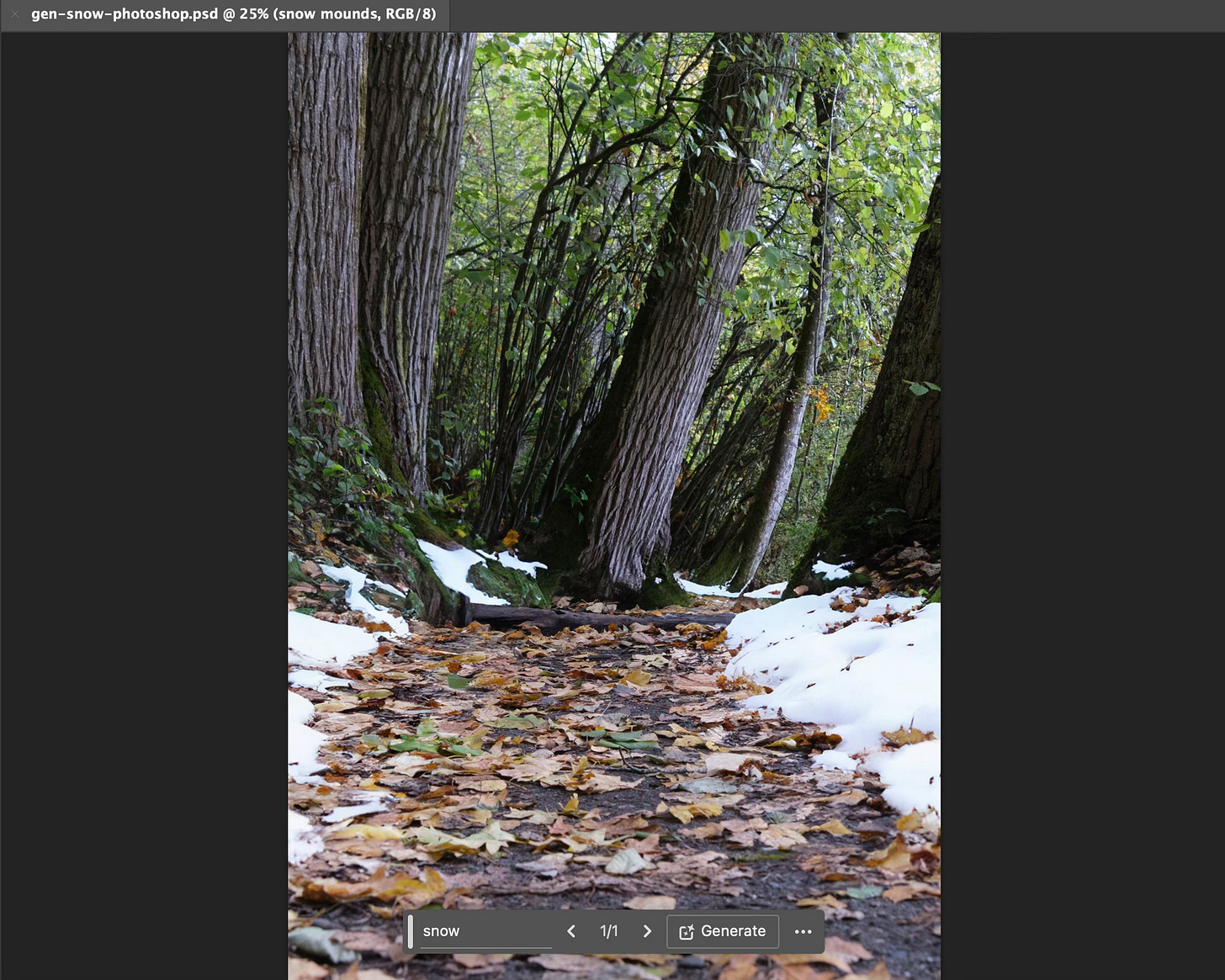

To compare, this was the same result in Photoshop:

When I got more fanciful, the results at least gave me something close to what I was looking for. But really it’s a crapshoot.

Generative AI Is Hard

I would say the Generative AI features in Luminar Neo are a promising start. They’re not particularly fast, and getting just one result per generation is frustrating—but that’s probably because I’m now accustomed to choosing from three in Photoshop. I don’t know where Skylum is sourcing its image generation; they told me that the data is built on “open source data sets,” and that the company is not storing any input or output. They’re also not using customer images as part of a learning process to improve the models. Skylum is also not charging extra for the image generations.

Whether the quality of the GenErase results is acceptable depends, of course, on what’s being erased and where you intend the edited image to end up. For the most part, it’s still a work in progress, particularly GenSwap.

Further

I’m thrilled to still be on the iPhone 15 beat at DPReview, where I’ve recently published two Sample Galleries. They’re a fair bit of work to assemble, I’ll admit, but I love that DPReview publishes plenty of images so readers get a sense of what cameras and lenses are capable of producing.

Check them out here:

iPhone 15 Pro sample gallery: Do its high-res photos measure up?

iPhone 15 Plus sample gallery: Does resolution compensate for fewer photo options?

One more thing: Is “promptography” going to be a thing? Becca Farsace at The Verge went to Berlin to talk to Boris Eldagsen, whose AI-generated photo won a prominent photography competition before he revealed that it was synthetic. This video, Is AI the end of photography as we know it?, is a fascinating snippet of an entirely different way to make photos.

Let’s Connect

Thanks again for reading and recommending The Smarter Image to others who would be interested. Send any questions, tips, or suggestions for what you’d like to see covered at jeff@jeffcarlson.com. Are these emails too long? Too short? Let me know.