Generative Fill Hands-On and Apple VisionPro Hands-Off

The future is now, but it's also coming next year.

I held this edition of the newsletter until my newest article for DPReview was published; it went up yesterday (see below). That was also the day Apple announced the VisionPro AR headset, which has all sorts of interesting implications (and a hefty price tag). So here we go! Thanks as always for being a subscriber, and if you haven’t yet, consider becoming a paid subscriber to support my work for as little as $4.17 per month. Thanks!

First Thing

Apple announced an entirely new product, prompting a spiral of “what does it mean?” conjecture. Fortunately, in terms of photography, there’s not a whole lot to say yet about the VisionPro augmented reality (AR) headset that will be sold next year starting at $3500.

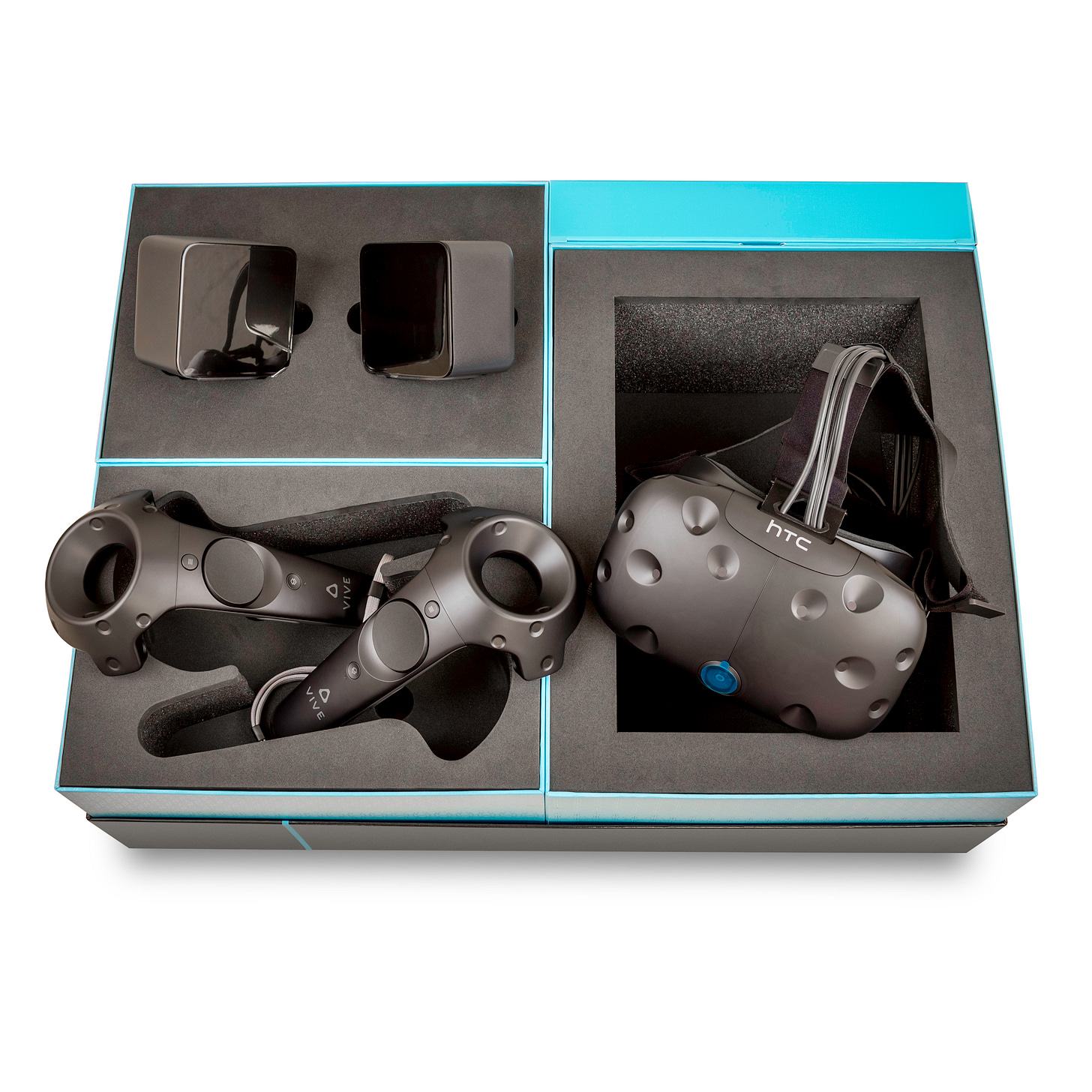

But what we know so far is actually pretty cool. In general, the technology packed into this thing is impressive as hell. Back in 2015 I worked adjacent to (meaning, I shared the same space with) the team developing the original HTC Vive VR system, so I’ve seen first-hand how difficult this stuff is.

Based on reports from the handful of people at Apple’s Worldwide Developers Conference (WWDC) who’ve actually spent time with VisionPro prototypes, the display technology is better than anything currently on the market, the eye-tracking system for selecting virtual items is responsive and accurate, and controlling it with just your fingers (no handheld controllers) works well.

But let’s concentrate on the photographic side of things, which comes down to two items. First, assuming the image quality holds up, I’m looking forward to being able to view photos as large floating screens, or in the case of panoramas, wrap-around immersive views. Adobe Lightroom also made a quick appearance as an example of editing photos in a third-party app.

The other notable bit is being able to record videos and photos from the headset itself, which Apple is touting as a way to create immersive 3D scenes. Which sounds great! Until you pull back and see that you’re wearing ski goggles over your face to do it. In the video about VisionPro, a pair of children are playing and don’t seem fazed at all by the fact that their dad looks like an astronaut while recording them.

It looks weird now, but maybe it’ll blend in the way we’ve come to tolerate raised cell phones and traditional cameras. For now, though, it feels like an interesting application of technology that hasn’t yet taken the social ramifications into account.

There’s a lot of AI and machine learning (ML) at work here, from evaluating the scene in front of you to a novel and possibly weird FaceTime experience: When video conferencing with others, it’s great that you can see them in floating windows, but do they want to interact with your goggled self? No. So instead, the VisionPro creates an avatar of your face that responds to your facial expressions in real time, making it look to the other participants as if you’re there bare-faced. In the video, the experience does dip into the Uncanny Valley, but… it’s not terrible? We’ll have to see how the technology develops over the next year.

A couple of other WWDC announcements were noteworthy, including an updated Mac Studio with M2 Max and M2 Ultra processors. The Mac Studio is quickly becoming the workhorse Mac for creatives, so if you need a new machine for photo and video editing, it would be a great option. Apple also finally announced a Mac Pro running Apple Silicon, powered by the M2 Ultra, with the expansion capabilities that come with Apple’s only tower Mac.

But I think the most appealing new Mac for many people will be the 15-inch MacBook Air: still slim, powered by the M2 processor, with a larger screen.

WWDC is also where Apple reveals details about its forthcoming operating systems. The only news regarding the Photos app is the ability to recognize pets as well as people, which is great for animal lovers. From the AI/ML standpoint, another interesting feature is a mode that lets you superimpose yourself onto video calls, such as appearing in front of a document you’re sharing with others. The app mmhmm has been doing this for the past couple of years.

Hands-On With Generative Fill in DPReview

I wrote last time about the new Generative Fill feature in the public beta of Photoshop. Now I’m happy to point to an article in DPReview that goes into more detail about what the feature does: Generative Fill in Photoshop (Beta) Hands On.

I also had fun making a supercut of YouTubers’ first reactions to the technology.

I even jumped on a fad by taking the cover of Taylor Swift’s Evermore album and using Generative Fill to create an “expanded” version (the image at the top of this newsletter). Because you can set the crop edges outside the original image boundaries, it’s fun to see what Generative Fill comes up with to expand the scene. The trend caught on enough that PetaPixel asked the question, Who Owns a Photo Expanded by Generative Fill?

Further

I Tested AI Photo Apps Like Adobe Firefly and Midjourney on Strangers: Joanna Stern at the Wall Street Journal (video)

Reimagine app for correcting old photos: With denoise and generative fill technologies, can we do a better job of repairing scans of old, damaged photos? This app specializes in just that.

Paragraphica: Now to blow your mind a little. Paragraphica is a “context-to-image camera that uses location data and artificial intelligence to visualize a ‘photo’ of a specific place and moment. The camera exists both as a physical prototype and a virtual camera that you can try.”

Let’s Connect

Thanks again for reading and recommending Photo AI to others who would be interested. Send any questions, tips, or suggestions for what you’d like to see covered at jeff@jeffcarlson.com. Are these emails too long? Too short? Let me know.